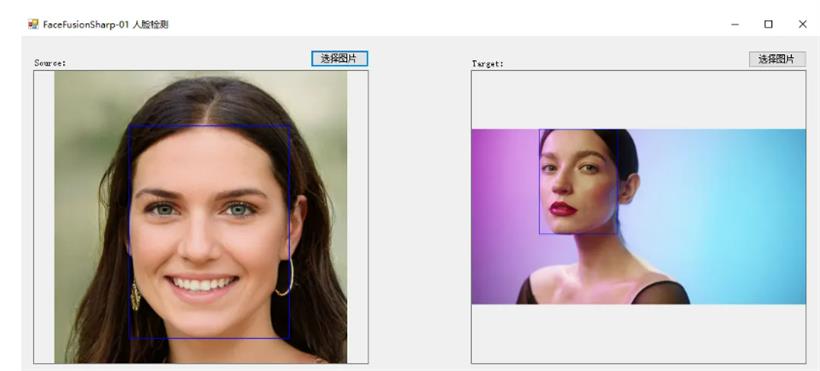

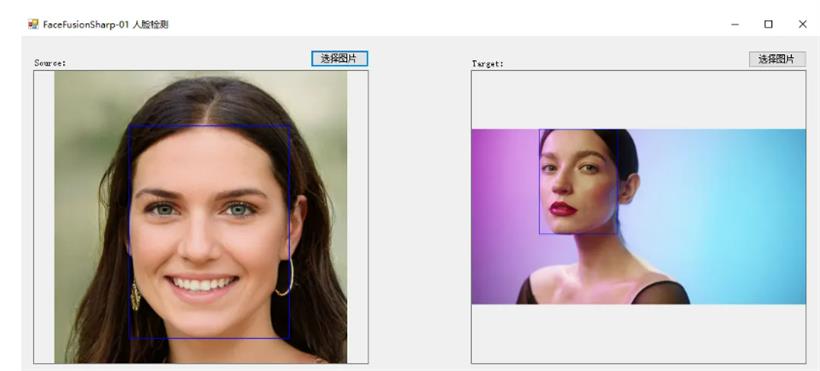

Results first

Face detection

Overview

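The C# version of FaceFusion consists of five steps:

1. Face detection with yoloface_8n.onnx
2. Face landmark extraction with 2dfan4.onnx
3. Face embedding extraction with arcface_w600k_r50.onnx
4. Face swapping with inswapper_128.onnx
5. Face enhancement with gfpgan_1.4.onnx

This article implements step 1 of the C# FaceFusion pipeline, face detection, using yoloface_8n.onnx.

Along the way we also look at the equivalent C++ implementation for comparison.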

Model information

```text
Model Properties
-------------------------
date:2024-01-13T23:32:01.523479
description:Ultralytics YOLOv8n-pose model trained on /ssd2t/derron/ultralytics/ultralytics/datasets/widerface.yaml
author:Ultralytics
kpt_shape:[5, 3]
task:pose
license:AGPL-3.0 https://ultralytics.com/license
version:8.1.0
stride:32
batch:1
imgsz:[640, 640]
names:{0: 'face'}
---------------------------------------------------------------
Inputs
-------------------------
name:images
tensor:Float[1, 3, 640, 640]
---------------------------------------------------------------
Outputs
-------------------------
name:output0
tensor:Float[1, 20, 8400]
---------------------------------------------------------------
```
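The output layout is easiest to see through its index arithmetic. The tensor is channel-first, so value `r` of candidate `i` sits at `pdata[r * 8400 + i]`, which is how the `detect` methods in this article index it. The C++ sketch below decodes one candidate, including the five keypoints that the article's code deliberately skips. It assumes the standard YOLOv8 detection heads at strides 8, 16 and 32 (the metadata only lists `stride:32`, the largest), which gives 80×80 + 40×40 + 20×20 = 8400 candidates for a 640×640 input; `FaceCandidate`, `num_anchors` and `decode_candidate` are hypothetical names used only for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Candidate count, assuming YOLOv8 heads at strides 8, 16 and 32:
// (640/8)^2 + (640/16)^2 + (640/32)^2 = 6400 + 1600 + 400 = 8400.
constexpr int num_anchors(int input_size) {
    return (input_size / 8) * (input_size / 8) +
           (input_size / 16) * (input_size / 16) +
           (input_size / 32) * (input_size / 32);
}

struct FaceCandidate {
    float xmin, ymin, xmax, ymax;  // box corners in the 640x640 model-input space
    float score;                   // face confidence (row 4)
    std::array<float, 15> kps;     // 5 keypoints as (x, y, confidence), rows 5..19
};

// pdata holds the (20, num_box) output with the batch dimension dropped.
// Row r of candidate i is stored at pdata[r * num_box + i].
FaceCandidate decode_candidate(const std::vector<float>& pdata, int num_box, int i) {
    assert(pdata.size() == static_cast<std::size_t>(20) * num_box);
    assert(i >= 0 && i < num_box);
    auto at = [&](int row) { return pdata[static_cast<std::size_t>(row) * num_box + i]; };
    const float cx = at(0), cy = at(1), w = at(2), h = at(3);
    FaceCandidate c{};
    // Convert center/size to corner coordinates
    c.xmin = cx - 0.5f * w;
    c.ymin = cy - 0.5f * h;
    c.xmax = cx + 0.5f * w;
    c.ymax = cy + 0.5f * h;
    c.score = at(4);
    for (int k = 0; k < 15; ++k) c.kps[k] = at(5 + k);
    return c;
}
```

The keypoint coordinates come out in the same model-input space as the box, so they would need the same per-axis ratio scaling as the box corners before use on the original image.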

Project

Code

Calling code (Form1)

```csharp
using OpenCvSharp;
using OpenCvSharp.Extensions;
using System;
using System.Collections.Generic;
using System.Drawing;
using System.Windows.Forms;

namespace FaceFusionSharp
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        string fileFilter = "Image files|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";
        string source_path = "";
        string target_path = "";
        Yolov8Face detect_face;

        // Choose the source image
        private void button2_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;
            pictureBox1.Image = null;
            source_path = ofd.FileName;
            pictureBox1.Image = new Bitmap(source_path);
        }

        // Choose the target image
        private void button3_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;
            pictureBox2.Image = null;
            target_path = ofd.FileName;
            pictureBox2.Image = new Bitmap(target_path);
        }

        // Detect faces in both images and draw the first box of each
        private void button1_Click(object sender, EventArgs e)
        {
            if (pictureBox1.Image == null)
            {
                return;
            }
            button1.Enabled = false;
            Application.DoEvents();
            try
            {
                Mat source_img = Cv2.ImRead(source_path);
                List<Bbox> boxes = detect_face.detect(source_img);
                if (boxes.Count == 0)
                {
                    MessageBox.Show("No face detected in the source image!");
                    return;
                }
                // Draw
                Cv2.Rectangle(source_img, new OpenCvSharp.Point(boxes[0].xmin, boxes[0].ymin), new OpenCvSharp.Point(boxes[0].xmax, boxes[0].ymax), new Scalar(255, 0, 0), 2);
                // Display
                pictureBox1.Image = source_img.ToBitmap();

                if (pictureBox2.Image == null)
                {
                    return;
                }
                Mat target_img = Cv2.ImRead(target_path);
                boxes = detect_face.detect(target_img);
                if (boxes.Count == 0)
                {
                    MessageBox.Show("No face detected in the target image!");
                    return;
                }
                // Draw
                Cv2.Rectangle(target_img, new OpenCvSharp.Point(boxes[0].xmin, boxes[0].ymin), new OpenCvSharp.Point(boxes[0].xmax, boxes[0].ymax), new Scalar(255, 0, 0), 2);
                // Display
                pictureBox2.Image = target_img.ToBitmap();
            }
            finally
            {
                // Re-enable the button on every exit path
                button1.Enabled = true;
            }
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            detect_face = new Yolov8Face("model/yoloface_8n.onnx");
            target_path = "images/target.jpg";
            source_path = "images/source.jpg";
            pictureBox1.Image = new Bitmap(source_path);
            pictureBox2.Image = new Bitmap(target_path);
        }
    }
}
```

Yolov8Face.cs

```csharp
using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using OpenCvSharp;
using System;
using System.Collections.Generic;
using System.Linq;

namespace FaceFusionSharp
{
    internal class Yolov8Face
    {
        float[] input_image;
        int input_height;
        int input_width;
        float ratio_height;
        float ratio_width;
        float conf_threshold;
        float iou_threshold;
        SessionOptions options;
        InferenceSession onnx_session;

        public Yolov8Face(string modelpath, float conf_thres = 0.5f, float iou_thresh = 0.4f)
        {
            options = new SessionOptions();
            options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
            options.AppendExecutionProvider_CPU(0);
            // Create the inference session from the local model file
            onnx_session = new InferenceSession(modelpath, options);
            // Fixed input size from the model metadata: images Float[1, 3, 640, 640]
            this.input_height = 640;
            this.input_width = 640;
            conf_threshold = conf_thres;
            iou_threshold = iou_thresh;
        }

        // Shrink the image if it exceeds the input size (keeping the aspect ratio),
        // zero-pad it on the right and bottom, normalize to (x - 127.5) / 128 and flatten to CHW.
        void preprocess(Mat srcimg)
        {
            int height = srcimg.Rows;
            int width = srcimg.Cols;
            Mat temp_image = srcimg.Clone();
            if (height > input_height || width > input_width)
            {
                float scale = Math.Min((float)input_height / height, (float)input_width / width);
                Size new_size = new Size((int)(width * scale), (int)(height * scale));
                Cv2.Resize(srcimg, temp_image, new_size);
            }
            // Factors that map model-space coordinates back to the original image
            ratio_height = (float)height / temp_image.Rows;
            ratio_width = (float)width / temp_image.Cols;
            Mat input_img = new Mat();
            Cv2.CopyMakeBorder(temp_image, input_img, 0, input_height - temp_image.Rows, 0, input_width - temp_image.Cols, BorderTypes.Constant, 0);
            temp_image.Dispose();
            Mat[] bgrChannels = Cv2.Split(input_img);
            for (int c = 0; c < 3; c++)
            {
                bgrChannels[c].ConvertTo(bgrChannels[c], MatType.CV_32FC1, 1 / 128.0, -127.5 / 128.0);
            }
            Cv2.Merge(bgrChannels, input_img);
            foreach (Mat channel in bgrChannels)
            {
                channel.Dispose();
            }
            input_image = Common.ExtractMat(input_img);
            input_img.Dispose();
        }

        public List<Bbox> detect(Mat srcimg)
        {
            preprocess(srcimg);
            Tensor<float> input_tensor = new DenseTensor<float>(input_image, new[] { 1, 3, input_height, input_width });
            List<NamedOnnxValue> input_container = new List<NamedOnnxValue>
            {
                NamedOnnxValue.CreateFromTensor("images", input_tensor)
            };
            // Output shape is (1, 20, 8400). Ignoring the batch dimension, each of the 8400 columns
            // holds 20 values: indices 0-3 are the box (cx, cy, w, h), index 4 is the confidence,
            // and indices 5-19 are the 5 keypoints as (x, y, confidence) triples.
            float[] pdata;
            // Dispose the native output buffers once the data has been copied out
            using (var ort_outputs = onnx_session.Run(input_container))
            {
                pdata = ort_outputs.First().AsTensor<float>().ToArray();
            }
            int num_box = 8400;
            List<Bbox> bounding_box_raw = new List<Bbox>();
            List<float> score_raw = new List<float>();
            for (int i = 0; i < num_box; i++)
            {
                float score = pdata[4 * num_box + i];
                if (score > conf_threshold)
                {
                    // Convert (cx, cy, w, h) to (xmin, ymin, xmax, ymax) and scale back to the original image
                    float xmin = (float)((pdata[i] - 0.5 * pdata[2 * num_box + i]) * ratio_width);
                    float ymin = (float)((pdata[num_box + i] - 0.5 * pdata[3 * num_box + i]) * ratio_height);
                    float xmax = (float)((pdata[i] + 0.5 * pdata[2 * num_box + i]) * ratio_width);
                    float ymax = (float)((pdata[num_box + i] + 0.5 * pdata[3 * num_box + i]) * ratio_height);
                    // A bounds check that clamps the coordinates to the image could be added here
                    bounding_box_raw.Add(new Bbox(xmin, ymin, xmax, ymax));
                    score_raw.Add(score);
                    // The 5 keypoints are not decoded, because no downstream module uses them
                }
            }
            List<int> keep_inds = Common.nms(bounding_box_raw, score_raw, iou_threshold);
            int keep_num = keep_inds.Count;
            List<Bbox> boxes = new List<Bbox>();
            for (int i = 0; i < keep_num; i++)
            {
                int ind = keep_inds[i];
                boxes.Add(bounding_box_raw[ind]);
            }
            return boxes;
        }
    }
}
```
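The coordinate restoration in `detect` is a single multiply per axis, and that only works because of how `preprocess` pads: the image is shrunk (never enlarged) to fit 640×640 and zeros are added on the right and bottom, so the top-left origin never moves. The C++ sketch below reproduces just that arithmetic with no OpenCV involved; `LetterboxGeometry` and `letterbox_geometry` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>

// Geometry of the "shrink if too large, then pad right/bottom" preprocessing.
struct LetterboxGeometry {
    int resized_w, resized_h;   // size after the optional downscale
    int pad_right, pad_bottom;  // zero padding added on the right and bottom
    float ratio_w, ratio_h;     // multiply model-space coordinates by these
};

LetterboxGeometry letterbox_geometry(int width, int height, int input_w = 640, int input_h = 640) {
    assert(width > 0 && height > 0);
    int new_w = width, new_h = height;
    // Only downscale; smaller images are padded without resizing
    if (height > input_h || width > input_w) {
        const float scale = std::min(static_cast<float>(input_h) / height,
                                     static_cast<float>(input_w) / width);
        new_w = static_cast<int>(width * scale);
        new_h = static_cast<int>(height * scale);
    }
    LetterboxGeometry g{};
    g.resized_w = new_w;
    g.resized_h = new_h;
    g.pad_right = input_w - new_w;
    g.pad_bottom = input_h - new_h;
    g.ratio_w = static_cast<float>(width) / new_w;
    g.ratio_h = static_cast<float>(height) / new_h;
    return g;
}
```

For a 1280×720 frame this yields a 640×360 image with 280 rows of bottom padding and both ratios equal to 2, so a box edge at x = 100 in model space lands at x = 200 in the original frame. A 300×200 image is only padded, so both ratios are 1.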

Bbox.cs

```csharp
namespace FaceFusionSharp
{
    public class Bbox
    {
        public Bbox(float xmin, float ymin, float xmax, float ymax)
        {
            this.xmin = xmin;
            this.ymin = ymin;
            this.xmax = xmax;
            this.ymax = ymax;
        }

        public float xmin { get; set; }
        public float ymin { get; set; }
        public float xmax { get; set; }
        public float ymax { get; set; }
    }
}
```
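`detect` calls `Common.nms`, but the article does not show its source. As an assumption about what such a helper typically does, here is a standard greedy IoU-based non-maximum suppression in C++ with a stand-in `Bbox` struct; it is not the article's implementation. This sketch returns kept indices in descending score order, so `boxes[0]` would be the most confident face. `Form1` draws only `boxes[0]`, so the ordering that `Common.nms` actually uses decides which face is shown, and that cannot be confirmed from the article.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

struct Bbox { float xmin, ymin, xmax, ymax; };  // stand-in for the article's Bbox

// Intersection over union of two corner-format boxes.
float iou(const Bbox& a, const Bbox& b) {
    const float ix = std::max(0.0f, std::min(a.xmax, b.xmax) - std::max(a.xmin, b.xmin));
    const float iy = std::max(0.0f, std::min(a.ymax, b.ymax) - std::max(a.ymin, b.ymin));
    const float inter = ix * iy;
    const float area_a = (a.xmax - a.xmin) * (a.ymax - a.ymin);
    const float area_b = (b.xmax - b.xmin) * (b.ymax - b.ymin);
    const float uni = area_a + area_b - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: visit boxes from highest to lowest score, keep a box if it has not
// been suppressed, then suppress every lower-scoring box overlapping it above iou_thresh.
std::vector<int> nms(const std::vector<Bbox>& boxes, const std::vector<float>& scores, float iou_thresh) {
    assert(boxes.size() == scores.size());
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(), [&](int a, int b) { return scores[a] > scores[b]; });
    std::vector<bool> suppressed(boxes.size(), false);
    std::vector<int> keep;
    for (std::size_t p = 0; p < order.size(); ++p) {
        const int i = order[p];
        if (suppressed[i]) continue;
        keep.push_back(i);
        for (std::size_t q = p + 1; q < order.size(); ++q) {
            const int j = order[q];
            if (!suppressed[j] && iou(boxes[i], boxes[j]) > iou_thresh) suppressed[j] = true;
        }
    }
    return keep;
}
```

With `iou_thresh = 0.4` (the default in both versions), two 10×10 boxes offset by one pixel (IoU = 81/119 ≈ 0.68) collapse into the higher-scoring one, while a distant box survives.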

The ExtractMat method

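```csharp
// Member of the Common helper class.
// Requires: using System; using System.Runtime.InteropServices; using OpenCvSharp;
// Copies an interleaved (HWC) 32-bit float Mat into a planar float[] in CHW order.
public static float[] ExtractMat(Mat src)
{
    OpenCvSharp.Size size = src.Size();
    int channels = src.Channels();
    float[] result = new float[size.Width * size.Height * channels];
    GCHandle resultHandle = default;
    try
    {
        // Pin the managed array so OpenCV can write into it directly
        resultHandle = GCHandle.Alloc(result, GCHandleType.Pinned);
        IntPtr resultPtr = resultHandle.AddrOfPinnedObject();
        for (int i = 0; i < channels; ++i)
        {
            // Wrap the i-th plane of the result buffer as a single-channel Mat (no copy).
            // src must already be CV_32F; otherwise ExtractChannel reallocates cmat
            // and the data never reaches the result array.
            Mat cmat = new Mat(
                src.Height, src.Width,
                MatType.CV_32FC1,
                resultPtr + i * size.Width * size.Height * sizeof(float));
            Cv2.ExtractChannel(src, cmat, i);
            cmat.Dispose();
        }
    }
    finally
    {
        // Free only if Alloc succeeded
        if (resultHandle.IsAllocated)
        {
            resultHandle.Free();
        }
    }
    return result;
}
```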
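`ExtractMat` converts OpenCV's interleaved HWC layout (B, G, R stored together for each pixel) into the planar CHW layout that the `images` input expects, one complete channel plane after another. For comparison, the same reordering combined with the `(x - 127.5) / 128` normalization that `preprocess` applies can be written as a plain loop over a raw 8-bit buffer. This C++ sketch is an illustration only; the function name `hwc_to_chw_normalized` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Interleaved HWC uint8 image -> planar CHW float buffer, normalized as (x - 127.5) / 128.
std::vector<float> hwc_to_chw_normalized(const std::vector<std::uint8_t>& hwc,
                                         int height, int width, int channels = 3) {
    const std::size_t area = static_cast<std::size_t>(height) * width;
    assert(hwc.size() == area * channels);
    std::vector<float> chw(area * channels);
    for (std::size_t p = 0; p < area; ++p) {        // pixel index in row-major order
        for (int c = 0; c < channels; ++c) {        // channel order is kept (BGR stays BGR)
            chw[c * area + p] = (static_cast<float>(hwc[p * channels + c]) - 127.5f) / 128.0f;
        }
    }
    return chw;
}
```

Pixel value 0 maps to -0.99609375 and 255 maps to 0.99609375, so the network input stays just inside [-1, 1].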

C++ code

For comparison, here is the C++ implementation of yolov8face.

yolov8face.h

```cpp
#ifndef YOLOV8FACE
#define YOLOV8FACE

#include <fstream>
#include <sstream>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
//#include <cuda_provider_factory.h> /// uncomment to use CUDA acceleration
#include <onnxruntime_cxx_api.h>
#include "utils.h"

class Yolov8Face
{
public:
    Yolov8Face(std::string modelpath, const float conf_thres=0.5, const float iou_thresh=0.4);
    ~Yolov8Face() { delete ort_session; }              // release the session allocated with new
    Yolov8Face(const Yolov8Face&) = delete;            // a copy would share and double-delete ort_session
    Yolov8Face& operator=(const Yolov8Face&) = delete;
    void detect(cv::Mat srcimg, std::vector<Bbox> &boxes); //// returns only the boxes; scores and the 5 keypoints are not used downstream
private:
    void preprocess(cv::Mat img);
    std::vector<float> input_image;
    int input_height;
    int input_width;
    float ratio_height;
    float ratio_width;
    float conf_threshold;
    float iou_threshold;

    Ort::Env env = Ort::Env(ORT_LOGGING_LEVEL_ERROR, "Face Detect");
    Ort::Session *ort_session = nullptr;
    Ort::SessionOptions sessionOptions = Ort::SessionOptions();
    std::vector<char*> input_names;
    std::vector<char*> output_names;
    std::vector<std::vector<int64_t>> input_node_dims;  // >=1 inputs
    std::vector<std::vector<int64_t>> output_node_dims; // >=1 outputs
    Ort::MemoryInfo memory_info_handler = Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU);
};
#endif
```

yolov8face.cpp

```cpp
#include "yolov8face.h"

using namespace cv;
using namespace std;
using namespace Ort;

Yolov8Face::Yolov8Face(string model_path, const float conf_thres, const float iou_thresh)
{
    /// OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0); /// uncomment to use CUDA acceleration
    sessionOptions.SetGraphOptimizationLevel(ORT_ENABLE_BASIC);
    /// std::wstring widestr = std::wstring(model_path.begin(), model_path.end()); //// Windows version
    /// ort_session = new Session(env, widestr.c_str(), sessionOptions);         //// Windows version
    ort_session = new Session(env, model_path.c_str(), sessionOptions);         //// Linux version

    size_t numInputNodes = ort_session->GetInputCount();
    size_t numOutputNodes = ort_session->GetOutputCount();
    AllocatorWithDefaultOptions allocator;
    for (size_t i = 0; i < numInputNodes; i++)
    {
        input_names.push_back(ort_session->GetInputName(i, allocator)); /// API of older onnxruntime versions
        //// Newer onnxruntime versions: GetInputNameAllocated returns an owning AllocatedStringPtr.
        //// Keep it alive (e.g. in a hypothetical std::vector<Ort::AllocatedStringPtr> member input_name_ptrs)
        //// before storing .get(); otherwise the stored pointer dangles after this loop iteration.
        //// input_name_ptrs.push_back(ort_session->GetInputNameAllocated(i, allocator));
        //// input_names.push_back(input_name_ptrs.back().get());
        Ort::TypeInfo input_type_info = ort_session->GetInputTypeInfo(i);
        auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();
        auto input_dims = input_tensor_info.GetShape();
        input_node_dims.push_back(input_dims);
    }
    for (size_t i = 0; i < numOutputNodes; i++)
    {
        output_names.push_back(ort_session->GetOutputName(i, allocator)); /// API of older onnxruntime versions
        //// Newer onnxruntime versions (same lifetime rule, hypothetical member output_name_ptrs):
        //// output_name_ptrs.push_back(ort_session->GetOutputNameAllocated(i, allocator));
        //// output_names.push_back(output_name_ptrs.back().get());
        Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);
        auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
        auto output_dims = output_tensor_info.GetShape();
        output_node_dims.push_back(output_dims);
    }
    // Input size read from the model: [1, 3, H, W]
    this->input_height = input_node_dims[0][2];
    this->input_width = input_node_dims[0][3];
    this->conf_threshold = conf_thres;
    this->iou_threshold = iou_thresh;
}

void Yolov8Face::preprocess(Mat srcimg)
{
    const int height = srcimg.rows;
    const int width = srcimg.cols;
    Mat temp_image = srcimg.clone();
    // Shrink only if the image exceeds the input size, keeping the aspect ratio
    if (height > this->input_height || width > this->input_width)
    {
        const float scale = std::min((float)this->input_height / height, (float)this->input_width / width);
        Size new_size = Size(int(width * scale), int(height * scale));
        resize(srcimg, temp_image, new_size);
    }
    // Factors that map model-space coordinates back to the original image
    this->ratio_height = (float)height / temp_image.rows;
    this->ratio_width = (float)width / temp_image.cols;
    Mat input_img;
    // Zero-pad on the right and bottom so the top-left origin is unchanged
    copyMakeBorder(temp_image, input_img, 0, this->input_height - temp_image.rows, 0, this->input_width - temp_image.cols, BORDER_CONSTANT, 0);

    vector<cv::Mat> bgrChannels(3);
    split(input_img, bgrChannels);
    for (int c = 0; c < 3; c++)
    {
        // (x - 127.5) / 128
        bgrChannels[c].convertTo(bgrChannels[c], CV_32FC1, 1 / 128.0, -127.5 / 128.0);
    }

    // Copy the three planes one after another (CHW layout)
    const int image_area = this->input_height * this->input_width;
    this->input_image.resize(3 * image_area);
    size_t single_chn_size = image_area * sizeof(float);
    memcpy(this->input_image.data(), (float *)bgrChannels[0].data, single_chn_size);
    memcpy(this->input_image.data() + image_area, (float *)bgrChannels[1].data, single_chn_size);
    memcpy(this->input_image.data() + image_area * 2, (float *)bgrChannels[2].data, single_chn_size);
}

//// Returns only the boxes: the scores and the 5 keypoints are not used by the downstream modules
void Yolov8Face::detect(Mat srcimg, std::vector<Bbox> &boxes)
{
    this->preprocess(srcimg);

    std::vector<int64_t> input_img_shape = {1, 3, this->input_height, this->input_width};
    Value input_tensor_ = Value::CreateTensor<float>(memory_info_handler, this->input_image.data(), this->input_image.size(), input_img_shape.data(), input_img_shape.size());

    Ort::RunOptions runOptions;
    vector<Value> ort_outputs = this->ort_session->Run(runOptions, this->input_names.data(), &input_tensor_, 1, this->output_names.data(), output_names.size());

    /// Shape is (1, 20, 8400). Ignoring the batch dimension, each column holds 20 values:
    /// indices 0-3 are the box (cx, cy, w, h), index 4 is the confidence,
    /// and indices 5-19 are the 5 keypoints as (x, y, confidence) triples.
    float *pdata = ort_outputs[0].GetTensorMutableData<float>();
    const int num_box = ort_outputs[0].GetTensorTypeAndShapeInfo().GetShape()[2];
    vector<Bbox> bounding_box_raw;
    vector<float> score_raw;
    for (int i = 0; i < num_box; i++)
    {
        const float score = pdata[4 * num_box + i];
        if (score > this->conf_threshold)
        {
            /// Convert (cx, cy, w, h) to (xmin, ymin, xmax, ymax) and scale back to the original image
            float xmin = (pdata[i] - 0.5 * pdata[2 * num_box + i]) * this->ratio_width;
            float ymin = (pdata[num_box + i] - 0.5 * pdata[3 * num_box + i]) * this->ratio_height;
            float xmax = (pdata[i] + 0.5 * pdata[2 * num_box + i]) * this->ratio_width;
            float ymax = (pdata[num_box + i] + 0.5 * pdata[3 * num_box + i]) * this->ratio_height;
            //// A bounds check that clamps the coordinates to the image could be added here
            bounding_box_raw.emplace_back(Bbox{xmin, ymin, xmax, ymax});
            score_raw.emplace_back(score);
            /// The 5 keypoints are not decoded, because no downstream module uses them
        }
    }
    vector<int> keep_inds = nms(bounding_box_raw, score_raw, this->iou_threshold);
    const int keep_num = keep_inds.size();
    boxes.clear();
    boxes.resize(keep_num);
    for (int i = 0; i < keep_num; i++)
    {
        const int ind = keep_inds[i];
        boxes[i] = bounding_box_raw[ind];
    }
}
```
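Both the C# and C++ versions mark the coordinate bounds check as optional and leave it out. Decoded boxes can extend past the image edges, for example when a face touches the border (`cx - w/2` becomes negative). A clamp like the one below could be applied to each box before drawing or cropping. This is a minimal C++ sketch with a stand-in `Bbox` struct and hypothetical function names; treating box edges as continuous coordinates in [0, width] × [0, height] is an assumption, and a pixel-index convention would clamp to `width - 1` and `height - 1` instead.

```cpp
#include <algorithm>
#include <cassert>

struct Bbox { float xmin, ymin, xmax, ymax; };  // stand-in for the article's Bbox

// Clamp v into [lo, hi] (spelled out because std::clamp needs C++17).
static float clamp_coord(float v, float lo, float hi) { return std::max(lo, std::min(v, hi)); }

// Clamp every edge of the box to the image rectangle [0, width] x [0, height].
Bbox clamp_to_image(Bbox b, int width, int height) {
    assert(width > 0 && height > 0);
    const float w = static_cast<float>(width);
    const float h = static_cast<float>(height);
    b.xmin = clamp_coord(b.xmin, 0.0f, w);
    b.ymin = clamp_coord(b.ymin, 0.0f, h);
    b.xmax = clamp_coord(b.xmax, 0.0f, w);
    b.ymax = clamp_coord(b.ymax, 0.0f, h);
    return b;
}

// A box that collapsed to zero width or height after clamping can be discarded.
bool has_area(const Bbox& b) { return b.xmax > b.xmin && b.ymax > b.ymin; }
```

Boxes that fall entirely inside the zero-padded region (which can only happen with a false positive) end up with no area after clamping, so `has_area` gives a cheap way to drop them.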

Fujian Public Security Internet Filing No. 35020302035485 (闽公网安备 35020302035485号)
