- 联系我们

- duidaima.com 版权声明

- 闽ICP备2020021581号

-

闽公网安备 35020302035485号

闽公网安备 35020302035485号

闽公网安备 35020302035485号

闽公网安备 35020302035485号

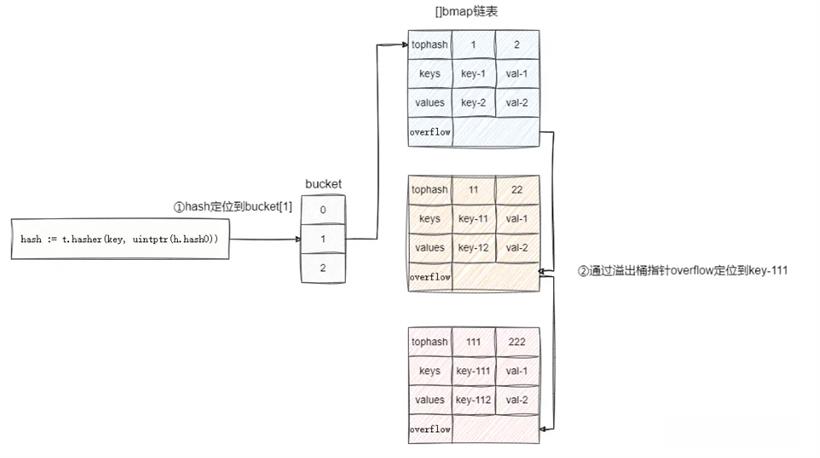

很明显,如果极端情况下因为有限的桶导致大量的冲突就很可能使map元素定位的时间复杂度退化为O(n),所以我们需要重新计算哈希值以及对桶进行扩容,从而解决极端的哈希冲突场景。

自此我们完成了扩容操作,go语言中的map为了避免扩容后大量迁移导致的计算耗时,对于旧有的bucket元素采用渐进式再哈希的方式进行迁移。 所以后续我们在进行相同的写入操作时,若发现这个桶正处于扩容状态,那么它就会计算当前桶中每个元素的新位置,然后一次性进行驱逐。

func main() {

m := make(map[int]string)

for i := 0; i < 9; i++ {

m[i] = "xiaoming"

}

}

上述这段代码经过编译之后,实际调用的是mapassign_fast64这个方法:0x0092 00146 (F:\github\awesomeProject\main.go:10) CALL runtime.mapassign_fast64(SB)这里笔者就贴出这段代码的核心片段,从核心代码可以看出当map符合进行扩容的条件时会调用hashGrow进行扩容,再通过goto again到again对应代码段进行驱逐操作:

func mapassign_fast64(t *maptype, h *hmap, key uint64) unsafe.Pointer {

// ......

again:

bucket := hash & bucketMask(h.B)

if h.growing() {

growWork_fast64(t, h, bucket)

}

b := (*bmap)(add(h.buckets, bucket*uintptr(t.bucketsize)))

var insertb *bmap

var inserti uintptr

var insertk unsafe.Pointer

// ......

//如果map未进行扩容,且当前map负载超过最大值(默认6.5)或者溢出桶数量超过了bucket数量则进行扩容

if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) {

hashGrow(t, h)

goto again // Growing the table invalidates everything, so try again

}

// ......

}

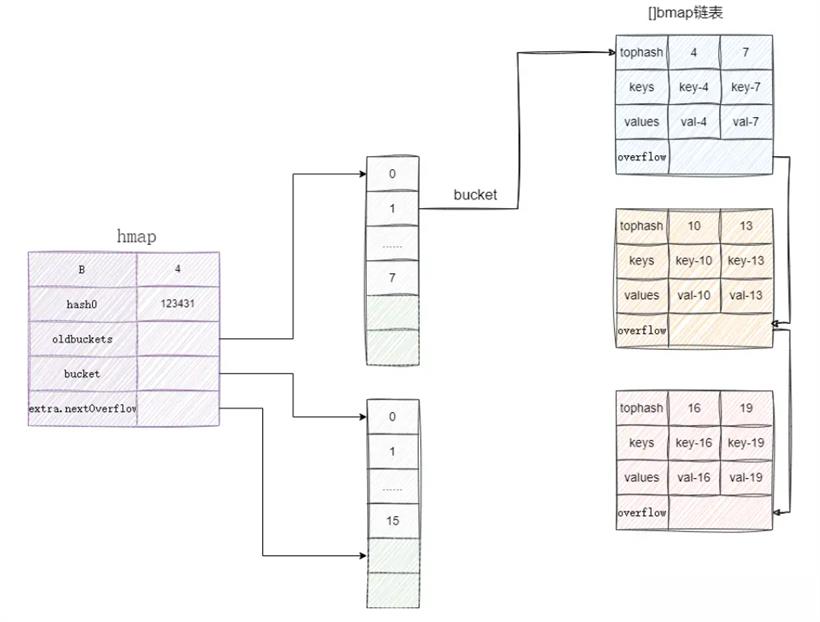

hashGrow的扩容逻辑和上图的流程差不多,读者可以基于笔者给出的核心注释进行了解:func hashGrow(t *maptype, h *hmap) {

//默认B的值是+1

bigger := uint8(1)

//oldbuckets 记录现在的bucket

oldbuckets := h.buckets

//创建一个新的bucket和溢出桶

newbuckets, nextOverflow := makeBucketArray(t, h.B+bigger, nil)

//更新b、oldbuckets 指针指向原有bucket、buckets 指向新创建的bucket

h.B += bigger

h.flags = flags

h.oldbuckets = oldbuckets

h.buckets = newbuckets

h.nevacuate = 0

h.noverflow = 0

//如果原有的bucket还有空闲溢出桶,则记录到h.extra.oldoverflow指针上

if h.extra != nil && h.extra.overflow != nil {

// Promote current overflow buckets to the old generation.

if h.extra.oldoverflow != nil {

throw("oldoverflow is not nil")

}

h.extra.oldoverflow = h.extra.overflow

h.extra.overflow = nil

}

//如果刚刚有新创建的溢出桶,则用h.extra.nextOverflow指针进行管理

if nextOverflow != nil {

if h.extra == nil {

h.extra = new(mapextra)

}

h.extra.nextOverflow = nextOverflow

}

}

进行修改操作时会触发的again代码段的growWork_fast64方法其内部就涉及了驱逐操作方法evacuate_fast64方法,因为有了上文的图解,所以对于这段代码的解读就很容易了,我们直接查看evacuate_fast64的核心注释即可了解驱逐操作的含义:func growWork_fast64(t *maptype, h *hmap, bucket uintptr) {

//将对应哈希的oldbucket的元素驱逐到新bucket上

evacuate_fast64(t, h, bucket&h.oldbucketmask())

//......

}

func evacuate_fast64(t *maptype, h *hmap, oldbucket uintptr) {

//获取旧的bucket的指针

b := (*bmap)(add(h.oldbuckets, oldbucket*uintptr(t.bucketsize)))

//......

//通过[2]evacDst数组的0索引记录oldbucket的bucket、keys、values指针,1记录new buckets的bucket、keys、values指针

if !evacuated(b) {

var xy [2]evacDst

x := &xy[0]

//记录oldbucket的bucket、keys、values指针

x.b = (*bmap)(add(h.buckets, oldbucket*uintptr(t.bucketsize)))

x.k = add(unsafe.Pointer(x.b), dataOffset)

x.e = add(x.k, bucketCnt*8)

//new buckets的bucket、keys、values指针

if !h.sameSizeGrow() {

// Only calculate y pointers if we're growing bigger.

// Otherwise GC can see bad pointers.

y := &xy[1]

y.b = (*bmap)(add(h.buckets, (oldbucket+newbit)*uintptr(t.bucketsize)))

y.k = add(unsafe.Pointer(y.b), dataOffset)

y.e = add(y.k, bucketCnt*8)

}

//循环遍历非空的tophash进行驱逐操作

for ; b != nil; b = b.overflow(t) {

//获取在old bucket上的地址

k := add(unsafe.Pointer(b), dataOffset)

e := add(k, bucketCnt*8)

for i := 0; i < bucketCnt; i, k, e = i+1, add(k, 8), add(e, uintptr(t.elemsize)) {

//计算在旧的bucket的tophash以定位键值对

top := b.tophash[i]

if isEmpty(top) {

b.tophash[i] = evacuatedEmpty

continue

}

if top < minTopHash {

throw("bad map state")

}

//计算在新的bucket上的hash值

var useY uint8

if !h.sameSizeGrow() {

// Compute hash to make our evacuation decision (whether we need

// to send this key/elem to bucket x or bucket y).

hash := t.hasher(k, uintptr(h.hash0))

if hash&newbit != 0 {

useY = 1

}

}

b.tophash[i] = evacuatedX + useY // evacuatedX + 1 == evacuatedY, enforced in makemap

//获取新的bucket的指针地址

dst := &xy[useY] // evacuation destination

// 堆代码 duidaima.com

//到新bucke的tophash数组上的位置记录这个tophash

dst.b.tophash[dst.i&(bucketCnt-1)] = top // mask dst.i as an optimization, to avoid a bounds check

//将旧的bucket的key复制到新bucket上

if t.key.ptrdata != 0 && writeBarrier.enabled {

if goarch.PtrSize == 8 {

// 通过指针操作,将旧的key复制到新的bucket的key上

*(*unsafe.Pointer)(dst.k) = *(*unsafe.Pointer)(k)

} else {

// There are three ways to squeeze at least one 32 bit pointer into 64 bits.

// Give up and call typedmemmove.

typedmemmove(t.key, dst.k, k)

}

} else {

*(*uint64)(dst.k) = *(*uint64)(k)

}

//复制value到新bucket的位置上

typedmemmove(t.elem, dst.e, e)

//....

}

}

// 完成后删除旧的bucket的键值对,辅助GC

if h.flags&oldIterator == 0 && t.bucket.ptrdata != 0 {

b := add(h.oldbuckets, oldbucket*uintptr(t.bucketsize))

// Preserve b.tophash because the evacuation

// state is maintained there.

ptr := add(b, dataOffset)

n := uintptr(t.bucketsize) - dataOffset

memclrHasPointers(ptr, n)

}

}

if oldbucket == h.nevacuate {

advanceEvacuationMark(h, t, newbit)

}

}

经过这段代码之后,我们的旧的bucket上的桶的数据也就都驱逐完成了,随后跳出这些代码段,再次回到mapassign_fast64的赋值操作的代码找到合适key的位置设置键值对。func mapassign_fast64(t *maptype, h *hmap, key uint64) unsafe.Pointer {

//......

//定位到key的指针

bucketloop:

for {

for i := uintptr(0); i < bucketCnt; i++ {

//若定位到的bucket为nil,则说明这个位置没有被使用过,则进入该分支定位指针

if isEmpty(b.tophash[i]) {

if insertb == nil {

insertb = b

inserti = i

}

//如果找到当前tophash为0值,说明这个位置没有被用过,直接退出循环,到外部直接赋值

if b.tophash[i] == emptyRest {

break bucketloop

}

continue

}

//获取k判断和当前key值是否一致,若不一致的continue,反之记录直接到done代码段,直接设置value的值

k := *((*uint64)(add(unsafe.Pointer(b), dataOffset+i*8)))

if k != key {

continue

}

insertb = b

inserti = i

goto done

}

//.....

}

//定位到key位置设置key

insertk = add(unsafe.Pointer(insertb), dataOffset+inserti*8)

// store new key at insert position

*(*uint64)(insertk) = key

//元素数量+1

h.count++

done:

//设置value

elem := add(unsafe.Pointer(insertb), dataOffset+bucketCnt*8+inserti*uintptr(t.elemsize))

if h.flags&hashWriting == 0 {

fatal("concurrent map writes")

}

h.flags &^= hashWriting

return elem

}

扩容未完成时如何读func mapaccess1(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {

//......

//定位hash值

hash := t.hasher(key, uintptr(h.hash0))

//先定位到bucket指针

m := bucketMask(h.B)

b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.bucketsize)))

//在基于hash到oldbucket定位旧的bucket指针,若未发生驱逐则上b指针指向oldbucket

if c := h.oldbuckets; c != nil {

if !h.sameSizeGrow() {

// There used to be half as many buckets; mask down one more power of two.

m >>= 1

}

oldb := (*bmap)(add(c, (hash&m)*uintptr(t.bucketsize)))

//若未发生驱逐则上b指针指向oldbucket

if !evacuated(oldb) {

b = oldb

}

}

top := tophash(hash)

bucketloop:

//循环定位键值对

for ; b != nil; b = b.overflow(t) {

for i := uintptr(0); i < bucketCnt; i++ {

//......

//如果找到的key和我们要查询的key相等则直接返回

if t.key.equal(key, k) {

e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))

if t.indirectelem() {

e = *((*unsafe.Pointer)(e))

}

return e

}

}

}

return unsafe.Pointer(&zeroVal[0])

}

小结