以下环境来自于近两天的真实案例,关键地方已经做了脱敏。

1.现场环境如下

两台DELL R930服务器光纤直连一台联想V5030存储(OEM IBM的V5000),做的oracle rac,数据库版本是11.2.0.4。存储是按冗余路径的接法,配置了multipath。

2.客户反馈故障现象

据客户说现像已经持续了3个月,几乎每天都要坏一次,之前找过存储厂商的人来看说存储没问题,接口的运营商找到了几波高手过来蹲点半个月也没发现问题。客户只能每天把这套RAC的主机和存储全部断电,再开机就好了。这现象听起来诡异不诡异?

3.推理过程

我一听这么多大拿都解决不了,我来不也得歇菜?抱着忐忑的心态,我就来看看,以下是回溯故障前一天的日志(最近几个月的故障完 全一致)。处理问题先要收集好详细信息:与客户做好访谈,确定有最近没有改动,客户发现的故障时间,状态,记录好各个客户反馈的时间点。再去收集相关的日志,按时间点前后进行详细排查,最好能实际经历故障发生。

按客户说的,故障发生时,应用报错TNS监听错误,无法连到数据库,那就先来看看数据库的ALERT日志咋显示的。我看了两个节点的数据库alert日志,除了正常的日志切换,就是ASM报错,而且时间惊奇的一致:都是同一时间显示ASM实例无法连接,库挂掉了。

Tue Sep 03 09:21:16 2024

NOTE: ASMB terminating

Errors in file /u01/app/oracle/diag/rdbms/db/db2/trace/db2_asmb_11004.trc:

ORA-15064: communication failure with ASM instance

ORA-03113: end-of-file on communication channel

Process ID:

Session ID: 2047 Serial number: 3

Errors in file /u01/app/oracle/diag/rdbms/db/db2/trace/db2_asmb_11004.trc:

ORA-15064: communication failure with ASM instance

ORA-03113: end-of-file on communication channel

Process ID:

Session ID: 2047 Serial number: 3

ASMB (ospid: 11004): terminating the instance due to error 15064

Termination issued to instance processes. Waiting for the processes to exit

Tue Sep 03 09:21:26 2024

Instance termination failed to kill one or more processes

Instance terminated by ASMB, pid = 11004

那么ASM实例为啥挂掉呢。我们再来看看GRID用户下$ORACLE_HOME/logs/nodename/下的告警日志,问题挺明显,OCR磁盘组挂了,多次尝试仍然挂载不到磁盘。

2024-09-03 09:19:34.253:

[cssd(9014)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/mapper/ocr2 will be considered not functional in 99880 milliseconds

2024-09-03 09:19:35.254:

[cssd(9014)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/mapper/ocr1 will be considered not functional in 99170 milliseconds

2024-09-03 09:19:35.254:

[cssd(9014)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/mapper/ocr3 will be considered not functional in 99170 milliseconds

2024-09-03 09:20:03.816:

[crsd(9955)]CRS-2765:Resource 'ora.OCR.dg' has failed on server 'db02'.

2024-09-03 09:20:03.904:

[crsd(9955)]CRS-2878:Failed to restart resource 'ora.OCR.dg'

2024-09-03 09:20:03.905:

[crsd(9955)]CRS-2769:Unable to failover resource 'ora.OCR.dg'.

2024-09-03 09:20:24.465:

[cssd(9014)]CRS-1614:No I/O has completed after 75% of the maximum interval. Voting file /dev/mapper/ocr1 will be considered not functional in 49960 milliseconds

2024-09-03 09:20:24.466:

[cssd(9014)]CRS-1614:No I/O has completed after 75% of the maximum interval. Voting file /dev/mapper/ocr2 will be considered not functional in 49670 milliseconds

2024-09-03 09:20:24.466:

[cssd(9014)]CRS-1614:No I/O has completed after 75% of the maximum interval. Voting file /dev/mapper/ocr3 will be considered not functional in 49960 milliseconds

2024-09-03 09:20:54.757:

[cssd(9014)]CRS-1613:No I/O has completed after 90% of the maximum interval. Voting file /dev/mapper/ocr1 will be considered not functional in 19670 milliseconds

2024-09-03 09:20:54.757:

[cssd(9014)]CRS-1613:No I/O has completed after 90% of the maximum interval. Voting file /dev/mapper/ocr2 will be considered not functional in 19380 milliseconds

2024-09-03 09:20:54.757:

[cssd(9014)]CRS-1613:No I/O has completed after 90% of the maximum interval. Voting file /dev/mapper/ocr3 will be considered not functional in 19670 milliseconds

我们再往前推一推,看看这个时间附近,操作系统发生了什么,查看一下/var/log/message日志。现象很明显,HBA卡先是全部掉线,随后又恢复一个,这里磁盘用的都是multipath,双路径,按说挂1路也不会有问题。

主机1

Sep 3 09:17:54 db01 kernel: [86146.819752] qla2xxx [0000:0c:00.0]-500b:0: LOOP DOWN detected (2 3 0 0).

Sep 3 09:17:56 db01 kernel: [86148.145279] qla2xxx [0000:41:00.0]-500b:2: LOOP DOWN detected (2 3 0 0).

Sep 3 09:18:27 db01 kernel: [86179.012960] qla2xxx [0000:0c:00.0]-500a:0: LOOP UP detected (8 Gbps).

主机2

Sep 3 09:17:54 db02 kernel: [86146.819752] qla2xxx [0000:0c:00.0]-500b:0: LOOP DOWN detected (2 3 0 0).

Sep 3 09:17:56 db02 kernel: [86148.145279] qla2xxx [0000:41:00.0]-500b:2: LOOP DOWN detected (2 3 0 0).

Sep 3 09:18:27 db02 kernel: [86179.012960] qla2xxx [0000:0c:00.0]-500a:0: LOOP UP detected (8 Gbps).

但是此时检查multipath,显示路径全部丢失,随后触发RAC的机制,两台服务器都reboot了,但是reboot之后,仍然找不到存储。这时候就是客户说的每天现像,于是客户就去把设备都关了再开机。说到这里估计小伙伴们都能知道,这肯定是存储有毛病了啊,都识别不到存储这还不明显。但客户说找存储厂商的人排查过存储没问题,再说存储也没故障报警灯,而且他们每次故障都是先断存储的电,问我有没有可能是这个原因导致报HBA卡掉线的?

看客户如此说法,我说把存储的管理信息提供一下,我先分析一下存储的日志,顺便就等一下故障出现,看看什么现象不就完了。于是去存储上检查了一下历史事件,确实是没有报警,但是很可疑,显示了节点冷启动。

下载了系统日志,打开查看一下,解压snap日志包之后,找到snap.7XXXXX-1.240905.092325\dumps\elogs日志(这里说一下,检查了存储的时间比生产服务器慢了1个小时多)

# 堆代码 duidaima.com

Error Log Entry 6270

Node Identifier : node2

Object Type : node

Object ID : 1

Copy ID :

Sequence Number : 0

Root Sequence Number : 0

First Error Timestamp : Tue Sep 3 08:14:06 2024

: Epoch + 1725322446

Last Error Timestamp : Tue Sep 3 08:14:06 2024

: Epoch + 1725322446

Fixed Timestamp :

Error Count : 1

Error ID : 990245 : Node shutdown imminent

Error Code :

Status Flag(s) : EVENT

Status Flag(s) hex : 0x1

Type Flag : CONFIGURATION EVENT

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

这明显是存储出现了问题啊!

4.故障实锤

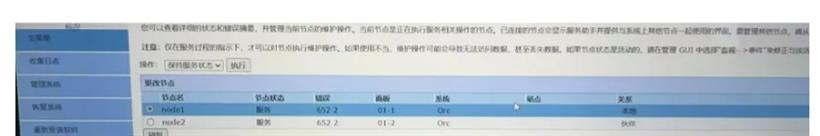

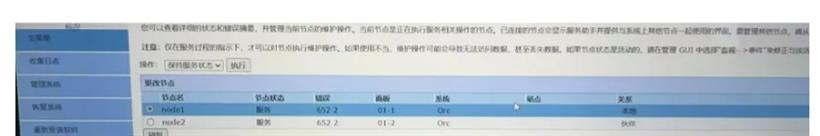

于是开是监控系统message日志,直到故障现像出现,显示HBA卡掉线,我立即冲到机房一看,果然存储其中1个控制器上的HBA卡灯全灭了,该控制器也变成了红色状态,随后另一个控制器节点也灭了,变成红灯。登录进存储维护界面,发现节点状态都变成了服务,且执行退出出无法恢复。

这就是故障的真实原因了,存储有问题,自动重启控制器,且重启完控制器处于维护模式无法退出,导致主机无法识别存储。

5.最后

从当前状况看,问题出现在存储这是无疑了,但是存储平时运行没有故障红灯这就很奇怪。问了下专业人事,怀疑有几种可能:

1、存储的背板有故障,导致两个控制器重启。

2、电池没电了导致控制器无法开机(这个虽然日志里有显示The estimated capacity loss of 739 mAh, based on Powered-On-Hours data, takes the maximum usable capacity of 1000 mAh below that required for a single FHD, the battery is therefore regarded as being at the end of its life)但是并没有告警。

3、可能是固件版本有BUG,导致集群出问题,这种比较常见,需要做存储T3恢复集群历史配置,稍微有点风险,但是存储早已经过保了,咱也不敢干。

后续问题就让客户自己决定是修存储还是换设备,毕竟这个存储已经运行7年多了。还有让我赞叹的就是,这套系统经历了90天的断电!断电!数据库几个T压根没开归档,最后数据也没丢,存储还能对付用,只能是感叹老外的设备和软件都挺抗祸祸

闽公网安备 35020302035485号

闽公网安备 35020302035485号

闽公网安备 35020302035485号

闽公网安备 35020302035485号