
闽公网安备 35020302035485号


<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>堆代码 duidaima.com</title>

</head>

<body>

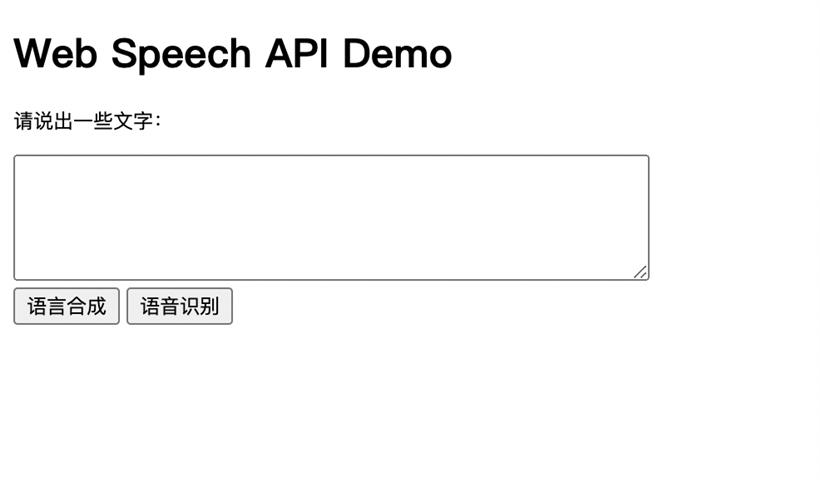

<h1>Web Speech API Demo</h1>

<p>Please say or type some text:</p>

<textarea id="input" cols="50" rows="5"></textarea>

<br>

<button id="speakBtn">Speech Synthesis</button>

<button id="transcribeBtn">Speech Recognition</button>

<br>

<p id="transcription"></p>

<script>

const recognition = new webkitSpeechRecognition(); // create the speech recognition object

recognition.continuous = true; // keep recognizing until stop() is called

const transcribeBtn = document.getElementById('transcribeBtn');

transcribeBtn.addEventListener('click', function() {

recognition.start(); // start speech recognition

});

recognition.onresult = function(event) {

let result = '';

for (let i = event.resultIndex; i < event.results.length; i++) {

result += event.results[i][0].transcript;

}

const transcript = document.getElementById('transcription');

transcript.textContent = result; // display the recognition result as plain text

};

const speakBtn = document.getElementById('speakBtn');

speakBtn.addEventListener('click', function() {

const text = document.getElementById('input').value; // read the text from the textarea

const msg = new SpeechSynthesisUtterance(text); // create the speech synthesis utterance

window.speechSynthesis.speak(msg); // start speech synthesis

});

</script>

</body>

</html>
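
The demo above calls `webkitSpeechRecognition` directly, so it only works in browsers that expose that prefixed constructor. As a minimal sketch, support could be checked before the buttons are wired up. The helper names `getSpeechRecognition` and `supportsSpeechSynthesis` are hypothetical and are not part of the original demo:

```javascript
// Hypothetical helpers (not in the original demo) for feature detection.
// `scope` is normally `window`; passing it in keeps the checks easy to test.
function getSpeechRecognition(scope) {
  if (!scope) return null;
  // Prefer the unprefixed constructor, then fall back to the WebKit-prefixed one.
  return scope.SpeechRecognition || scope.webkitSpeechRecognition || null;
}

function supportsSpeechSynthesis(scope) {
  return Boolean(scope && scope.speechSynthesis && scope.SpeechSynthesisUtterance);
}

// Browser-only wiring; skipped when there is no `window` or `document`.
if (typeof window !== 'undefined' && typeof document !== 'undefined') {
  const transcribeBtn = document.getElementById('transcribeBtn');
  const speakBtn = document.getElementById('speakBtn');
  // Disable a button when the feature it depends on is missing.
  if (transcribeBtn && !getSpeechRecognition(window)) transcribeBtn.disabled = true;
  if (speakBtn && !supportsSpeechSynthesis(window)) speakBtn.disabled = true;
}
```

Disabling the buttons is only one possible fallback; showing a short message to the user would work just as well.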

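The Web Speech API demo is simple. Click the speech recognition button and what you say is transcribed and shown on the page; type some text, click the speech synthesis button, and the computer reads it aloud. The browser's synthesized voice sounds rather mechanical and not very lifelike, though, so we can use Microsoft's speech synthesis instead. You can try it at this address: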

https://speech.microsoft.com/audiocontentcreation
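
How natural the built-in synthesis sounds depends partly on which voice the browser uses. Before moving to a cloud service, one option is to select a voice explicitly from `speechSynthesis.getVoices()`. The sketch below assumes a hypothetical `pickVoice` helper, a hypothetical `speakWithVoice` wrapper, and a `zh-CN` target language; none of these appear in the original demo:

```javascript
// Hypothetical helper: choose a voice for `lang` from a list of voice-like
// objects ({ name, lang }), such as the list returned by speechSynthesis.getVoices().
function pickVoice(voices, lang) {
  const normalize = (tag) => String(tag || '').replace(/_/g, '-').toLowerCase();
  const wanted = normalize(lang);
  const primary = wanted.split('-')[0];
  // 1. Exact language tag match, e.g. "zh-CN".
  const exact = voices.find((v) => normalize(v.lang) === wanted);
  if (exact) return exact;
  // 2. Same primary language, e.g. any "zh-*" voice.
  const sameLanguage = voices.find((v) => normalize(v.lang).split('-')[0] === primary);
  return sameLanguage || null;
}

// Browser-only usage. getVoices() can return an empty list until the
// "voiceschanged" event has fired; in that case the default voice is used.
function speakWithVoice(text, lang = 'zh-CN') {
  const msg = new SpeechSynthesisUtterance(text);
  const voice = pickVoice(window.speechSynthesis.getVoices(), lang);
  if (voice) {
    msg.voice = voice;
    msg.lang = voice.lang;
  }
  window.speechSynthesis.speak(msg);
}
```

Which voices are available varies by browser and operating system, so this may or may not improve on the default.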

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<title>堆代码 duidaima.com</title>

</head>

<body>

<h1>Web Speech API + ChatGPT API</h1>

<button id="transcribeBtn">Hold to talk</button>

<br />

<p id="transcription"></p>

<script src="https://aka.ms/csspeech/jsbrowserpackageraw"></script>

<script>

async function requestOpenAI(content) {

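const BASE_URL = `` // optional proxy base URL; leave empty to call api.openai.com directly

const OPENAI_API_KEY = 'sk-xxxxx' // placeholder; a key embedded in page source is visible to every visitor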

const messages = [

{

role: 'system',

content: 'You are a helpful assistant',

},

{ role: 'user', content },

]

const res = await fetch(

`${BASE_URL || 'https://api.openai.com'}/v1/chat/completions`,

{

method: 'POST',

headers: {

'Content-Type': 'application/json',

authorization: `Bearer ${OPENAI_API_KEY}`,

},

body: JSON.stringify({

model: 'gpt-3.5-turbo',

messages,

temperature: 0.7,

top_p: 1,

frequency_penalty: 0,

presence_penalty: 0,

}),

}

)

if (!res.ok) {

throw new Error(`OpenAI request failed with status ${res.status}`)

}

const response = await res.json()

const result = response.choices[0].message.content

console.log(result)

return result

}

// Download the mp3 file

function download(result) {

const blob = new Blob([result.audioData])

const url = URL.createObjectURL(blob)

const link = document.createElement('a')

link.href = url

link.download = 'filename.mp3' // set the filename here

document.body.appendChild(link)

link.click()

document.body.removeChild(link)

URL.revokeObjectURL(url)

}

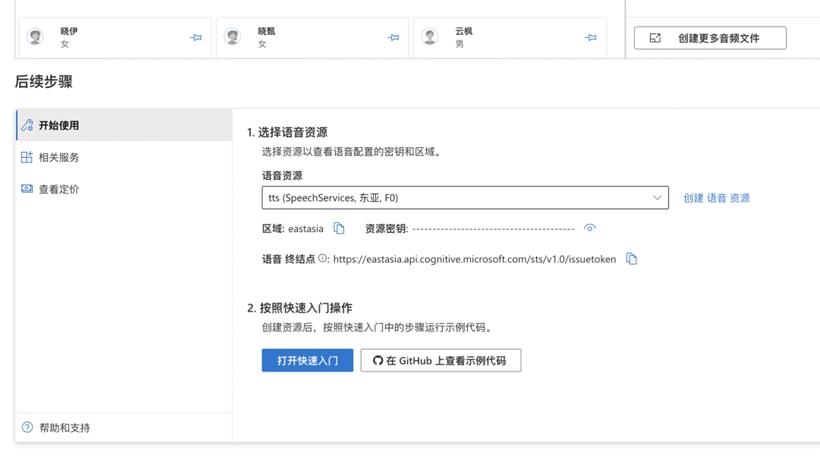

function synthesizeSpeech(text) {

const sdk = SpeechSDK

const speechConfig = sdk.SpeechConfig.fromSubscription(

'TTS_KEY',

'TTS_REGION'

)

const audioConfig = sdk.AudioConfig.fromDefaultSpeakerOutput()

const speechSynthesizer = new sdk.SpeechSynthesizer(

speechConfig,

audioConfig

)

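// Edit the SSML to change the voice; the text is XML-escaped so that characters such as < and & stay valid

speechSynthesizer.speakSsmlAsync(

`<speak xmlns="http://www.w3.org/2001/10/synthesis" xmlns:mstts="http://www.w3.org/2001/mstts" xmlns:emo="http://www.w3.org/2009/10/emotionml" version="1.0" xml:lang="zh-CN"><voice name="zh-CN-XiaoxiaoNeural">${text.replace(/&/g, '&amp;').replace(/</g, '&lt;').replace(/>/g, '&gt;')}</voice></speak>`,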

(result) => {

if (result) {

speechSynthesizer.close()

return result.audioData

}

},

(error) => {

console.log(error)

speechSynthesizer.close()

}

)

}

const SpeechRecognition =

window.SpeechRecognition || window.webkitSpeechRecognition

const recognition = new SpeechRecognition() // create the speech recognition object

recognition.continuous = true // keep recognizing until stop() is called

recognition.lang = 'cmn-Hans-CN' // Mandarin (mainland China)

const transcribeBtn = document.getElementById('transcribeBtn')

let record = false

transcribeBtn.addEventListener('mousedown', function () {

record = true

recognition.start() // start speech recognition

console.log('Speech recognition started')

transcribeBtn.textContent = 'Recording...'

})

transcribeBtn.addEventListener('mouseup', function () {

transcribeBtn.textContent = 'Hold to talk'

record = false

recognition.stop()

})

recognition.onend = () => {

console.log('Speech recognition stopped')

transcribeBtn.textContent = 'Hold to talk'

record = false

}

recognition.onerror = function (event) {

console.log(event.error)

}

recognition.onresult = function (event) {

console.log(event)

let result = ''

for (let i = event.resultIndex; i < event.results.length; i++) {

result += event.results[i][0].transcript

}

console.log(result)

const transcript = document.getElementById('transcription')

const p = document.createElement('p')

p.textContent = result

transcript.appendChild(p) // display the recognition result

requestOpenAI(result).then((res) => {

const p = document.createElement('p')

p.textContent = res

transcript.appendChild(p)

synthesizeSpeech(res)

})

}

</script>

</body>

</html>

In the code above: